Step-by-Step Guide to Setting up Django-RQ


Modern websites are complicated pieces of machinery. The requests they initiate can be intensive and take longer to complete than a typical HTTP request-response cycle allows. This is typically referred to as a blocking request, in that the browser (and user) waits until the response is received. If the work is large enough (e.g. a database query that returns 100,000 rows), the HTTP request will time out, resulting in an error message.

Job queues solve this blocking problem by allowing tasks to complete asynchronously, outside the request-response cycle. There are several popular job queues, including Celery and RQ. We've selected RQ for the purposes of this post.
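To make the blocking/non-blocking difference concrete, here is a stdlib-only sketch. It uses Python's queue and threading modules, not RQ or Redis, and the slow_report and handle_request functions are hypothetical. The "view" only enqueues the slow work and returns at once, while a background worker thread does the work. RQ follows the same producer/consumer shape, except that the queue lives in Redis and the worker is a separate process.

```python
import queue
import threading
import time

jobs = queue.Queue()   # stands in for the Redis-backed RQ queue
results = []           # where the worker records finished work


def slow_report(n):
    """Hypothetical long-running task (e.g. a large database query)."""
    time.sleep(0.5)
    return sum(range(n))


def worker():
    # Pull jobs forever; each job is (function, args), like enqueue(f, *args)
    while True:
        func, args = jobs.get()
        results.append(func(*args))
        jobs.task_done()


def handle_request(n):
    """Hypothetical view: enqueue the work instead of doing it inline."""
    jobs.put((slow_report, (n,)))
    return "202 Accepted"   # respond immediately; the work happens later


threading.Thread(target=worker, daemon=True).start()

start = time.perf_counter()
status = handle_request(100_000)
elapsed = time.perf_counter() - start
print(status, f"returned in {elapsed:.3f}s")   # well under the 0.5s task
jobs.join()                                    # wait for the worker to finish
print(results)                                 # [4999950000]
```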

Part I: Install Django-RQ in the Project

Set up the scaffolding:

settings.py

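INSTALLED_APPS = [
    # ...
    'django_rq',
    # ...
]

CACHES = {
    'default': {
        # django-redis backend; LOCATION uses the redis://host:port/db form
        'BACKEND': 'django_redis.cache.RedisCache',
        'LOCATION': 'redis://localhost:6379/1',
        'OPTIONS': {
            'CLIENT_CLASS': 'django_redis.client.DefaultClient',
            'MAX_ENTRIES': 5000,
        },
    },
}

# Reuse the Redis connection of the 'default' cache for the 'default' queue
RQ_QUEUES = {
    'default': {
        'USE_REDIS_CACHE': 'default',
    },
}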
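Because the queue borrows the cache's Redis connection through USE_REDIS_CACHE, a typo in the cache alias is easy to miss. The sketch below is a hypothetical helper (not part of django-rq) that inspects plain dictionaries shaped like the settings above; it does not connect to Redis. You could call it from a unit test with settings.CACHES and settings.RQ_QUEUES.

```python
def check_rq_cache_aliases(caches, rq_queues):
    """Return a list of problems in RQ_QUEUES entries that use USE_REDIS_CACHE.

    Hypothetical helper: it only inspects the dictionaries, it does not
    open any connection.
    """
    problems = []
    for name, conf in rq_queues.items():
        alias = conf.get('USE_REDIS_CACHE')
        if alias is None:
            continue  # this queue configures its own HOST/PORT/DB instead
        if alias not in caches:
            problems.append(
                f"queue {name!r}: cache alias {alias!r} is not defined in CACHES")
            continue
        backend = caches[alias].get('BACKEND', '')
        if 'redis' not in backend.lower():
            problems.append(
                f"queue {name!r}: cache {alias!r} does not use a Redis backend ({backend!r})")
    return problems


CACHES = {
    'default': {
        'BACKEND': 'django_redis.cache.RedisCache',
        'LOCATION': 'redis://localhost:6379/1',
    },
}
RQ_QUEUES = {'default': {'USE_REDIS_CACHE': 'default'}}

print(check_rq_cache_aliases(CACHES, RQ_QUEUES))   # []
print(check_rq_cache_aliases(CACHES, {'low': {'USE_REDIS_CACHE': 'redis'}}))
```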

pypi_requirements.txt

django-rq==1.2.0
django-redis

urls.py (add this entry to urlpatterns):

from django.conf.urls import include, url
url(r'^django-rq/', include('django_rq.urls')),

Write the code that adds jobs to the django_rq queue (in our case, a caching task):

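import django_rq
from django.db.models.signals import post_save

def update_site_and_page_choices(lang=None):
    from django.core.cache import cache
    # ... build site_choices and page_choices here ...
    # SITE_CHOICES_KEY and PAGE_CHOICES_KEY are cache-key constants defined elsewhere
    cache.set(SITE_CHOICES_KEY, site_choices)
    cache.set(PAGE_CHOICES_KEY, page_choices)

def _update_page_cache(sender, **kwargs):
    # post_save does not send a 'lang' argument, so this stays None for page saves
    lang = kwargs.get('lang', None)
    queue = django_rq.get_queue('default')
    # Hand the work to RQ; the rqworker process runs it outside the request
    queue.enqueue(update_site_and_page_choices, lang=lang)

# Page is the model whose saves should refresh the cache (import it from your app)
post_save.connect(_update_page_cache, sender=Page)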

Start the background worker that will pick up the new queue jobs (and keep it running in a separate terminal window):

python manage.py rqworker

And voilà! The next time the code that enqueues the job runs (in our case, whenever a page is saved), it will add a new job to the queue.

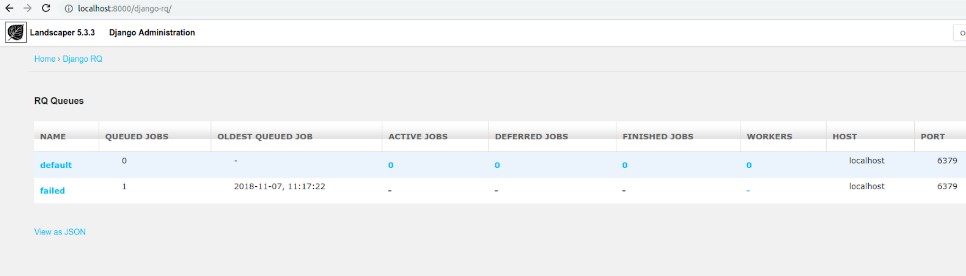

View the queues, workers and jobs in the django-rq dashboard at http://localhost:8000/django-rq/ (you need to be logged in as a staff user).
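The worker is a separate process from the web server, so it never receives the function object your signal handler holds. Roughly speaking, RQ records the function's dotted import path together with the pickled arguments, and the worker imports the function again before calling it; this is why the worker must run with your project code importable. The stdlib sketch below illustrates that idea with hypothetical make_job and run_job helpers; it is not RQ's actual serialization code.

```python
import importlib
import math
import pickle


def make_job(func, *args, **kwargs):
    """Serialize a job by the function's import path, not the function object."""
    # Only module-level functions work: nested names can't be resolved below
    dotted_path = f"{func.__module__}.{func.__qualname__}"
    return pickle.dumps((dotted_path, args, kwargs))


def run_job(payload):
    """What a worker process does: re-import the function, then call it."""
    dotted_path, args, kwargs = pickle.loads(payload)
    module_name, _, attr = dotted_path.rpartition('.')
    func = getattr(importlib.import_module(module_name), attr)
    return func(*args, **kwargs)


payload = make_job(math.pow, 2, 10)   # bytes, as they might sit in Redis
print(run_job(payload))               # 1024.0
```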

Part II: Daemonize the Worker on the Server

Add a file to /etc/supervisor/conf.d/, e.g. django_rq.conf. The directory tree then looks like this:

/etc/supervisor/
├── conf.d
│   ├── django.conf
│   ├── django_rq.conf
│   └── nginx.conf
└── supervisord.conf

Edit /etc/supervisor/conf.d/django_rq.conf:

[program:django_rq]
; http://python-rq.org/patterns/supervisor/
command=/srv/sites/project/envs/project/bin/python manage.py rqworker
stdout_logfile = /var/log/redis/django_rq.log
; process_num is required if you specify >1 numprocs
process_name=%(program_name)s-%(process_num)s
; If you want to run more than one worker instance, increase this
numprocs=1
; This is the directory from which RQ is run. Be sure to point this to the
; directory where your source code is importable from
directory=/srv/sites/project/
; RQ requires the TERM signal to perform a warm shutdown. If RQ does not die
; within 10 seconds, supervisor will forcefully kill it
stopsignal=TERM
autostart=true
autorestart=true
user=project
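The stopsignal=TERM line relies on RQ's warm shutdown: on SIGTERM the worker finishes the job it is currently running and then exits, instead of dying mid-job. Supervisor's stopwaitsecs option (default 10 seconds) sets how long it waits before sending SIGKILL. The stdlib sketch below (not RQ's code) shows the idea: the signal handler only sets a flag, and the loop checks that flag between jobs. The jobs and the arrival of TERM are simulated, and all names are hypothetical.

```python
import signal
import threading

stop_requested = threading.Event()


def request_warm_shutdown(signum, frame):
    # Don't exit inside the handler; just ask the loop to stop between jobs
    stop_requested.set()


def work_loop(jobs):
    """Run jobs in order, but start no new job once shutdown was requested."""
    done = []
    for job in jobs:
        if stop_requested.is_set():
            break
        job()                      # the current job always runs to completion
        done.append(job.__name__)
    return done


# signal.signal() may only be called from the main thread
if threading.current_thread() is threading.main_thread():
    signal.signal(signal.SIGTERM, request_warm_shutdown)


# Simulated jobs: TERM "arrives" while job_b is running
def job_a():
    pass


def job_b():
    request_warm_shutdown(signal.SIGTERM, None)


def job_c():
    pass


done = work_loop([job_a, job_b, job_c])
print(done)   # ['job_a', 'job_b']
```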

Activate it from the supervisor prompt:

sudo supervisorctl    # to get into the supervisor prompt
supervisor> status
django                    RUNNING   pid 30199, uptime 0:48:24
nginx                     RUNNING   pid 32219, uptime 4 days, 14:48:41
supervisor> update
supervisor> status
django                    RUNNING   pid 30199, uptime 18:47:14
django_rq:django_rq-0     RUNNING   pid 31863, uptime 0:05:27
nginx                     RUNNING   pid 31549, uptime 0:07:52

And now the rqworker is running on the server, waiting for tasks and processing them when they get placed in the queue.
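If you want a scripted health check instead of reading the prompt by hand, `supervisorctl status` prints one line per process: name, state, then free-form details. The hypothetical helper below parses that text and lists any process that is not RUNNING; the sample lines are taken from the session above. In a real script you might feed it the output of `subprocess.run(['supervisorctl', 'status'], capture_output=True, text=True).stdout`.

```python
def parse_supervisor_status(text):
    """Map each process name to its state from `supervisorctl status` output."""
    states = {}
    for line in text.strip().splitlines():
        parts = line.split(None, 2)   # name, state, free-form description
        if len(parts) >= 2:
            states[parts[0]] = parts[1]
    return states


sample = """\
django                 RUNNING   pid 30199, uptime 18:47:14
django_rq:django_rq-0  RUNNING   pid 31863, uptime 0:05:27
nginx                  RUNNING   pid 31549, uptime 0:07:52
"""

states = parse_supervisor_status(sample)
not_running = [name for name, state in states.items() if state != 'RUNNING']
print(states['django_rq:django_rq-0'], not_running)   # RUNNING []
```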
